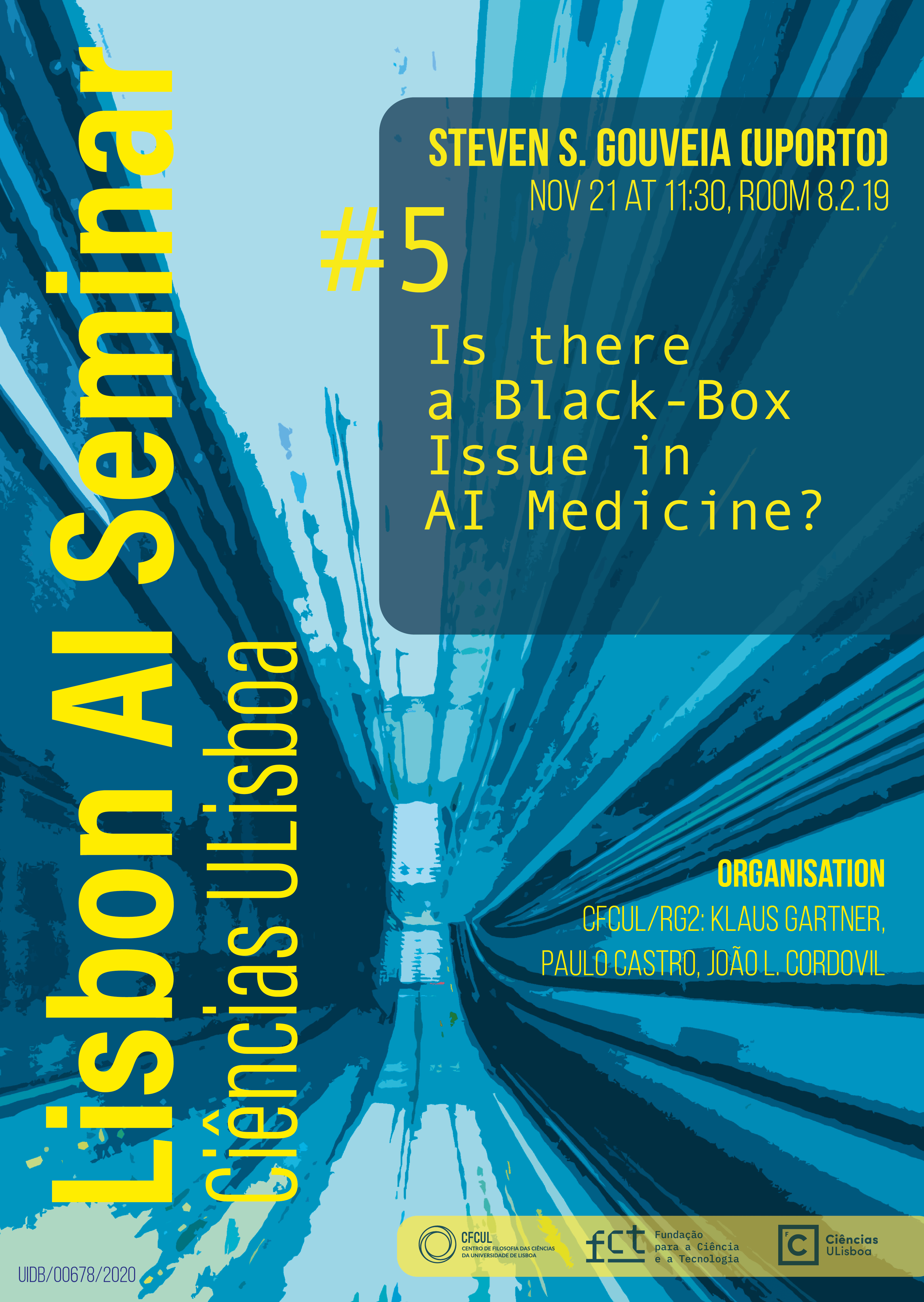

The “black-box issue” in AI medicine usually refers to the difficulty of understanding how AI algorithms generate judgments or predictions, and how they combine particular inputs to produce a particular result. Although this technology can produce results that are more accurate and efficient than those of what we may call “Traditional Medicine,” that is, medicine practiced by humans, the internal workings of these models are typically opaque or hard for humans to interpret. Because of the structural characteristics of AI models, clinicians and other healthcare professionals can find it difficult to understand why a model recommends a particular diagnosis or course of treatment. This raises questions about the model’s validity, safety, and ethical implications. Furthermore, since new medical treatments and diagnoses are frequently based on mechanistic explanations, to which AI models give no access, a lack of transparency can also delay their discovery.

Consider, for example, an AI model built to identify malignant cells in medical imaging. Such models are trained on large datasets of medical images and then use what they have learned to predict whether malignant cells appear in new, unseen images. Although these models are remarkably accurate, they frequently function as “black boxes”: humans cannot see or easily understand how they reach their decisions. For instance, it may not be evident which exact features the model uses to distinguish malignant from normal cells, or how it arrived at a specific diagnosis or treatment suggestion. This is particularly problematic in healthcare settings, where professionals and patients must understand the reasoning behind a diagnosis or treatment strategy.
Furthermore, if the model is opaque, it is difficult to identify potential biases and discrimination, and therefore impossible to correct them. This talk aims to determine whether there is, in fact, a “black box” issue in AI medicine and, if so, what possible solutions might address it.

Biographical note

Steven S. Gouveia holds a PhD in Philosophy from the University of Minho (Portugal) and is currently leading a 6-year research project on the Ethics of Artificial Intelligence in Medicine at the Mind, Language and Action Group of the Institute of Philosophy of the University of Porto (Portugal). He is also an Honorary Professor of the Faculty of Medicine Andrés Bello (Chile). He has published and edited 14 academic books on interdisciplinary topics, and has organized several online courses with the participation of prominent scholars such as Noam Chomsky, David Chalmers, Sir Roger Penrose, and Anil Seth. He hosted and produced the documentary “The Age of Artificial Intelligence”. More information: stevensgouveia.weebly.com.

About the Lisbon AI Seminar

The Lisbon AI Seminar is a periodic, interdisciplinary scientific meeting that aims to present topics in the Philosophy of Artificial Intelligence from diverse scientific domains, ranging from Computer Science to Physics, Biology, and the Social Sciences and Humanities. The seminar takes place monthly, lasts between 1h and 1h30, and runs in a hybrid format, in person and via Zoom, usually with an invited speaker. The Lisbon AI Seminar is intended to broaden the research area in Philosophy of Computation and Artificial Intelligence begun at CFCUL in 2022, seeking to foster reflection and debate on the nature and impact of Artificial Intelligence in contemporary societies. The aim is to make the discussion wide-ranging, surveying general concerns such as the artificial implementation of the mind and its consequences, the impact of AI on the production of scientific knowledge, the ethical implications of AI applications, and the social, economic, and political effects of AI.

Information

The seminar will be held in a hybrid format: in person, in room 8.2.19, building C8 of the Faculdade de Ciências, and by videoconference, via Zoom.

Zoom link

https://videoconf-colibri.zoom.us/j/95352592762?pwd=eGdBY0w1ckdGcGFxRUE3TmlIdHJ5UT09

Password: 864198

Address: Ciências ULisboa, Room 8.2.19

Faculdade de Ciências da Universidade de Lisboa

Building C8, Floor 2

Campo Grande, Lisboa